Visualizing Neural Network Training: Beyond Flat Projections

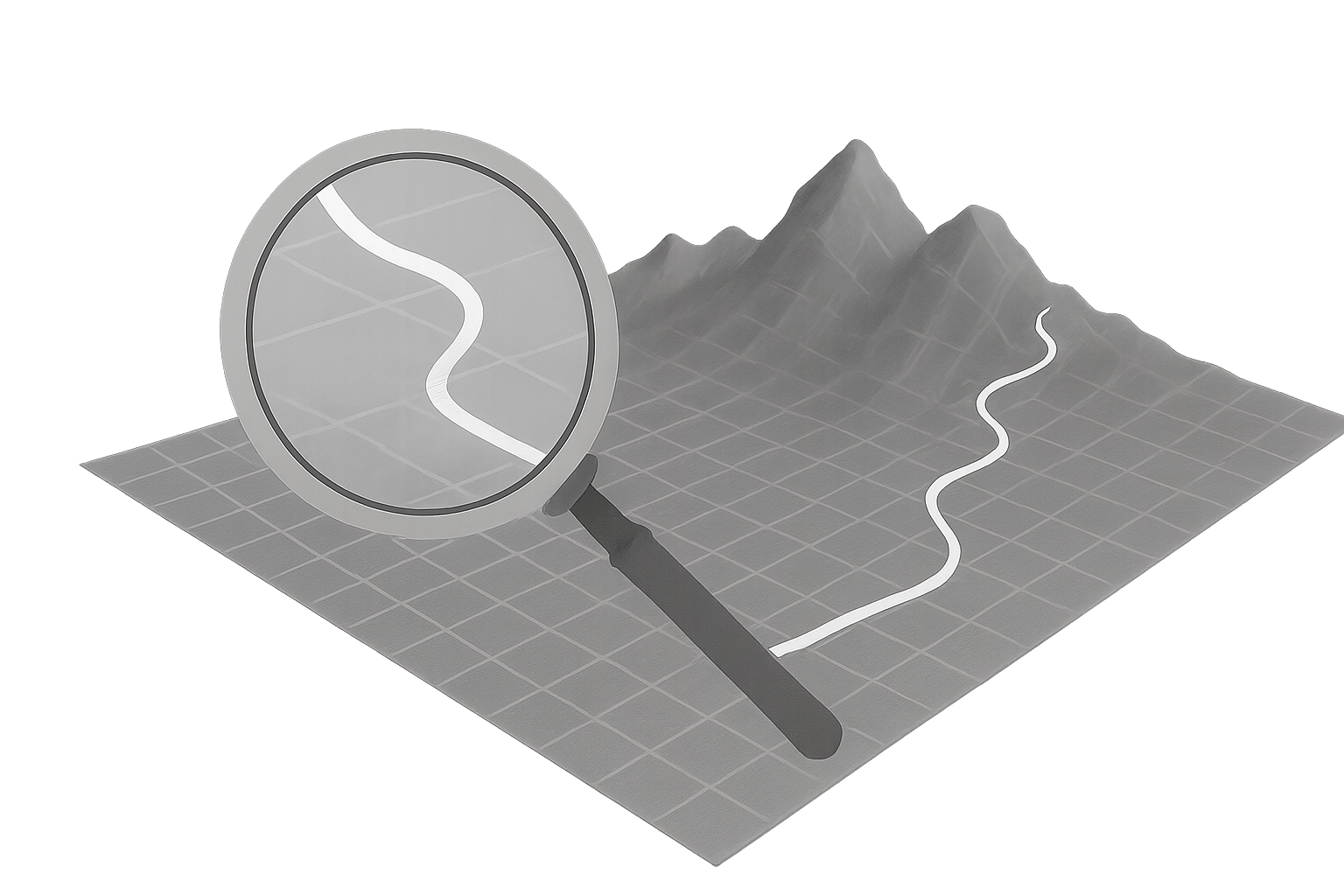

What if we could watch a neural network learn, following it across hills, valleys, and decision points as it optimizes? Loss landscape visualization tries to do just that, but common methods such as PCA and t-SNE often flatten out the twists and turns that matter most, leaving an oversimplified picture of training.

Neuro-Visualizer is a non-linear, autoencoder-based approach that captures the entire training path more faithfully. Rather than zooming in only around the final model, it reconstructs the full trajectory on a low-dimensional manifold, giving us a clearer picture of how learning unfolds.
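To make the idea concrete, here is a minimal sketch of an autoencoder that compresses a sequence of flattened weight checkpoints into 2-D coordinates. It is an illustration under stated assumptions, not the Neuro-Visualizer implementation: the function name `fit_autoencoder`, the layer sizes, the plain gradient-descent training loop, and the synthetic spiral used as stand-in checkpoints are all hypothetical choices made for this example.

```python
import numpy as np

def fit_autoencoder(X, hidden=16, latent=2, lr=0.1, epochs=500, seed=0):
    """Fit a tiny tanh autoencoder to rows of X (T checkpoints x D weights).

    Returns the latent coordinates of every checkpoint and the
    reconstruction loss recorded at each epoch.
    """
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    Xn = (X - X.mean(axis=0)) / (X.std() + 1e-8)  # crude global normalization
    # Encoder: D -> hidden -> latent; decoder: latent -> hidden -> D
    sizes = [Xn.shape[1], hidden, latent, hidden, Xn.shape[1]]
    weights = [rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_out))
               for n_in, n_out in zip(sizes[:-1], sizes[1:])]
    biases = [np.zeros(n) for n in sizes[1:]]
    tanh_layers = (0, 2)  # hidden layers are non-linear; latent and output are linear
    losses = []
    for _ in range(epochs):
        # Forward pass, keeping every activation for backpropagation
        acts = [Xn]
        for i in range(4):
            z = acts[-1] @ weights[i] + biases[i]
            acts.append(np.tanh(z) if i in tanh_layers else z)
        err = acts[-1] - Xn
        losses.append(float(np.mean(err ** 2)))
        # Backward pass for the mean-squared reconstruction error
        grad = 2.0 * err / err.size
        for i in reversed(range(4)):
            if i in tanh_layers:
                grad = grad * (1.0 - acts[i + 1] ** 2)
            grad_w, grad_b = acts[i].T @ grad, grad.sum(axis=0)
            grad = grad @ weights[i].T  # propagate before updating this layer
            weights[i] -= lr * grad_w
            biases[i] -= lr * grad_b
    latent_path = np.tanh(Xn @ weights[0] + biases[0]) @ weights[1] + biases[1]
    return latent_path, losses

# Synthetic stand-in for a training run: 50 "checkpoints" of a 20-parameter
# model that spiral inward, as an optimizer circling a minimum might.
rng = np.random.default_rng(1)
t = np.linspace(0.0, 4.0 * np.pi, 50)
spiral = np.stack([np.exp(-0.1 * t) * np.cos(t),
                   np.exp(-0.1 * t) * np.sin(t)], axis=1)
checkpoints = np.tanh(spiral @ rng.normal(size=(2, 20)))

latent_path, losses = fit_autoencoder(checkpoints)
```

Each row of `latent_path` is one checkpoint's position in the 2-D view. In a full pipeline one would presumably also decode a grid of latent points back to weight space and evaluate the loss at each to draw the surrounding landscape; that step is omitted here.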

With this method, we uncovered training dynamics that traditional tools often miss—like models circling around pockets of local minima rather than settling smoothly. These details help explain why some learning strategies succeed where others fall short, particularly in physics-informed or knowledge-guided settings. In doing so, the visualizations both confirmed and challenged assumptions in existing literature.

And because Neuro-Visualizer is flexible, we can shape the view depending on what we’re curious about: zooming in where the learning gets tricky, or spreading out the steps to better follow their path. It’s like adjusting the lens—not for prettier pictures, but for better understanding.
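As a rough illustration of what "shaping the view" could mean in practice, the sketch below shows two extra objectives one might add to an autoencoder's training loss. Both are assumptions made for this example, and the text above does not specify the actual formulation: `spacing_penalty` is a hypothetical term that rewards evenly spaced steps along the latent path, and `weighted_reconstruction_error` is a hypothetical term that gives more weight, and so more resolution, to the checkpoints we care about most.

```python
import numpy as np

def spacing_penalty(latent_path):
    """Variance of consecutive step lengths along the latent path.

    Zero when every step has the same length, so minimizing it
    spreads the checkpoints out evenly (hypothetical regularizer).
    """
    steps = np.linalg.norm(np.diff(latent_path, axis=0), axis=1)
    return float(np.var(steps))

def weighted_reconstruction_error(original, reconstructed, importance):
    """Mean squared error per checkpoint, averaged with importance weights.

    Larger weights push the model to reconstruct those checkpoints
    more accurately, i.e. to "zoom in" on them (hypothetical objective).
    """
    per_point = np.mean((original - reconstructed) ** 2, axis=1)
    w = np.asarray(importance, dtype=float)
    return float(np.sum(w / w.sum() * per_point))

# Evenly spaced path: no penalty. Uneven path: positive penalty.
even_path = np.stack([np.arange(5.0), np.zeros(5)], axis=1)
uneven_path = np.array([[0.0, 0.0], [1.0, 0.0], [3.0, 0.0]])
even_score = spacing_penalty(even_path)
uneven_score = spacing_penalty(uneven_path)

# The first checkpoint is reconstructed badly and weighted three times as heavily.
weighted_error = weighted_reconstruction_error(
    np.zeros((2, 2)), np.array([[1.0, 1.0], [0.0, 0.0]]), importance=[3.0, 1.0]
)
```

Either term would be added to the reconstruction loss with a tunable coefficient, which is one way to trade a faithful reconstruction against a more readable view.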